How can we ensure that self-driving vehicles (SDVs) are truly safe? Traditional safety analysis often misses critical feedback loops and self-regulatory mechanisms that nature takes for granted. Here we demonstrate how dialectical analysis can reveal these hidden requirements by examining both SDV operation and city-wide adoption cycles. Our method exposes crucial safety factors that typical engineering approaches might overlook, particularly in the system’s ability to self-correct when things go wrong.

SDV Operating Cycle

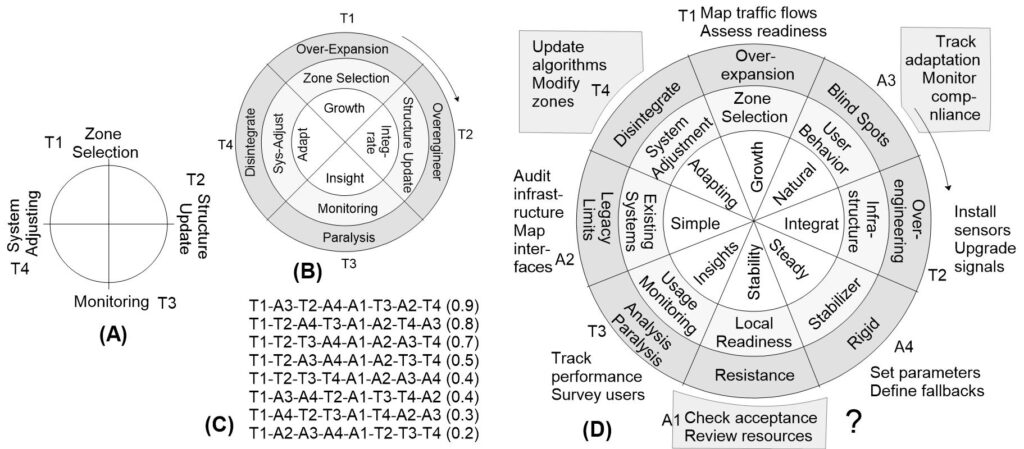

The basic operation of a self-driving vehicle follows four steps: Data Gathering – Data Processing – Decision Making – Acting/Executing (Scheme A).

However, from a dialectical perspective, a system becomes truly self-organizing only when each basic step is complemented by its control factor:

- Data gathering (T1) — Data Validation (A1)

- Data processing (T2) — Data clearance (A2)

- Decision making (T3) — Risk Assessment (A3)

- Acting/Executing (T4) — Control/Stability check (A4)

These control factors emerge from an analysis of the positive and negative aspects of each original step (Scheme B). Each control factor must be placed diagonally from its corresponding basic step, a fundamental requirement that conventional design approaches often overlook.
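The pairing and placement rule above can be written down as a small check. This is only a sketch: it assumes that "diagonally" means half a turn away on a circular arrangement of the eight elements, and the function name `is_diagonal` and both example wheels are illustrative, not taken from the schemes.

```python
# Step/control pairs, using the labels from the list above.
PAIRS = {
    "T1": "A1",  # Data gathering   -> Data validation
    "T2": "A2",  # Data processing  -> Data clearance
    "T3": "A3",  # Decision making  -> Risk assessment
    "T4": "A4",  # Acting/Executing -> Control/stability check
}


def is_diagonal(wheel):
    """Return True if every control factor sits opposite its basic step.

    Assumption: the wheel is a circular list of all eight labels, and
    "opposite" means exactly half the wheel away.
    """
    expected = sorted(list(PAIRS) + list(PAIRS.values()))
    if sorted(wheel) != expected:
        return False  # a label is missing or duplicated
    half = len(wheel) // 2
    pos = {label: i for i, label in enumerate(wheel)}
    return all(
        (pos[control] - pos[step]) % len(wheel) == half
        for step, control in PAIRS.items()
    )


# Illustrative wheels only; neither is claimed to be one of the schemes.
print(is_diagonal(["T1", "T2", "T3", "T4", "A1", "A2", "A3", "A4"]))  # True
print(is_diagonal(["T1", "A1", "T2", "A2", "T3", "A3", "T4", "A4"]))  # False
```

In the second wheel each control factor directly follows its own step, which is the arrangement a linear "do, then check" design would suggest, and it fails the diagonal rule.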

This requirement increases the number of steps from 4 to 8 and leaves only 8 possible causality sequences (Scheme C). Scheme D shows the most likely of these sequences, together with the elements that current SDV designs may be missing:

- Risk assessment following object recognition (A3)

- Control/stability verification after risk assessment (A4)

- Original data re-evaluation based on A3-A4 steps, with potential return to T2 (akin to OODA loop) (A1)

- System readiness refresh and buffer clearance before decision-making (A2)

These gaps, shown in grey zones in Scheme D, represent blind spots in current linear/rational approaches to SDV safety.
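The count of 8 sequences mentioned above can be reproduced by brute force under one reading of the rules. The sketch below assumes that the wheel starts at T1, that each control factor A_k sits exactly opposite its step T_k, and that T1 to T4 keep their original order around the wheel. The second and third conditions are assumptions made for illustration; the text states only the diagonal requirement and the resulting count.

```python
from itertools import permutations


def wheel_sequences():
    """Enumerate 8-step wheels starting at T1 under two assumed rules:
    (1) each A_k is opposite T_k, (2) T1..T4 keep their order."""
    rest = ["T2", "T3", "T4", "A1", "A2", "A3", "A4"]
    found = []
    for perm in permutations(rest):
        wheel = ("T1",) + perm
        pos = {label: i for i, label in enumerate(wheel)}
        opposite = all(
            (pos[f"A{k}"] - pos[f"T{k}"]) % 8 == 4 for k in range(1, 5)
        )
        ordered = pos["T2"] < pos["T3"] < pos["T4"]
        if opposite and ordered:
            found.append(wheel)
    return found


sequences = wheel_sequences()
print(len(sequences))  # 8
for wheel in sequences:
    print(" -> ".join(wheel))
```

One of the eight results is T1 -> T2 -> A3 -> A4 -> A1 -> A2 -> T3 -> T4, which matches one reading of the order in which the missing elements are listed above. Whether this is the exact sequence drawn in Scheme D is not confirmed by the text.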

SDV Adoption Cycle

While operating safety focuses on individual vehicles, adoption safety requires analyzing city-wide implementation. A city must select testing zones, update infrastructure, install monitoring systems, and adjust to real-world conditions. This process, too, can be examined through dialectical analysis.

The basic cycle appears simple: Zone Selection (T1) – Structure Update (T2) – Monitoring (T3) – System Adjustment (T4). However, our dialectical wheel reveals critical oversight zones (shown in grey in Scheme D):

- Track user behavior (A3)

- Check local readiness after implementing fallback thresholds (A1)

- System adjustment following assessment of unaffected areas (T4)

These “missing steps” raise important questions: If they seem unnecessary, perhaps our initial definitions need refinement. If they seem essential, they must be incorporated into adoption plans. Just as individual SDVs need safety redundancy, city-wide adoption requires comprehensive feedback mechanisms that conventional planning might overlook.

Conclusion

This analysis demonstrates how our method can expose critical vulnerabilities in complex artificial systems. Living systems achieve safety through homeostatic adaptation – their high degree of internal interconnection allows them to self-repair and adapt. In contrast, artificial systems, built mechanically rather than grown organically, have far fewer internal connections. This makes them vulnerable to catastrophic failures even from minor malfunctions.

Our analysis of both SDV operation and city-wide adoption reveals this fundamental weakness. While conventional safety analysis focuses on individual components and linear processes, dialectical wheels expose crucial feedback loops and control mechanisms that are often overlooked. The “grey zones” we identified represent connections that could provide essential self-regulatory capabilities.

The implications extend beyond self-driving vehicles to any complex artificial system. Unless we find ways to incorporate organic-like interconnectivity and self-regulation, our technological systems will remain fundamentally fragile despite their sophistication.