Most decision-makers assume blind spots come from “not having enough data” or “not having enough compute.”

But that’s not what blind spots are. Blind spots arise from how our minds frame the world, not from a shortage of information.

Psychologists use “blind spot” to describe any pattern of thinking that makes important information invisible, even when it’s right in front of us. So the natural question is:

How many of these blind spots could we reduce if everyone consulted AI before making important decisions?

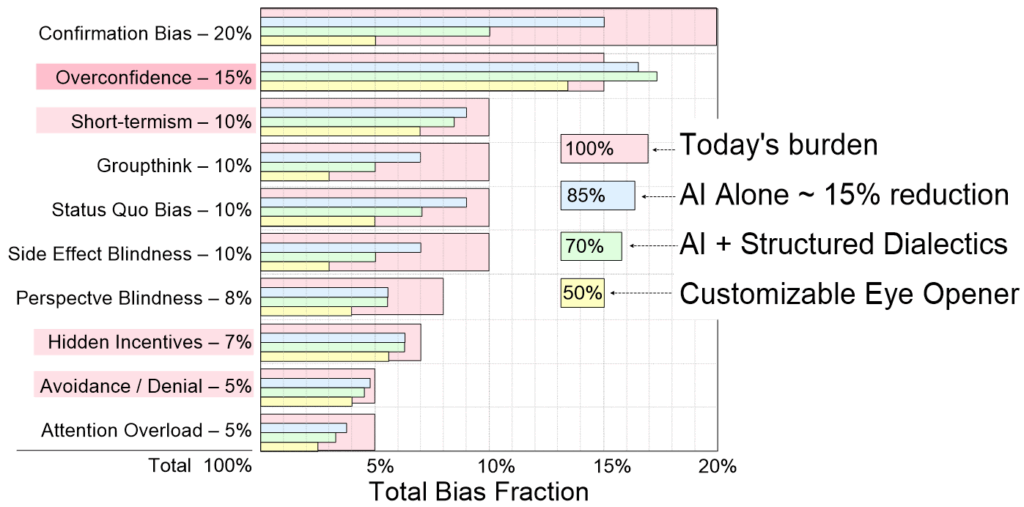

Figure 1. GPT estimates of how different tools reduce the major blind-spot types.

Here’s what those estimates suggest.

AI Alone Removes Only ~15%

If you ask GPT to critique your idea, you do uncover things you would normally overlook. This reduces some kinds of bias — especially confirmation bias and groupthink. But AI alone does not fix the deeper blind spots, such as overconfidence, short-termism, hidden incentives, and emotional avoidance. In fact, overconfidence can increase — because AI tends to validate your framing and make answers feel authoritative.

AI + Structured Dialectics: ~30% Reduction

Structured Dialectics (like the Eye Opener) adds something AI alone can’t: it forces you to think in opposites. Every idea (thesis) must be paired with its antithesis, and both must be evaluated for upsides and downsides.

This turns decision-making into a “both-and” exploration instead of a win-lose argument.

Why it works so well:

- It exposes the hidden good in ideas we normally reject.

- It reveals the hidden bad in ideas we normally love.

- It surfaces side effects and long-term oscillations.

- It breaks the illusion that our view is the whole picture.

It’s like learning to see in 3D instead of 2D — and it cuts the blind-spot load by roughly 30%.
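The thesis/antithesis pairing described above can be pictured as a small data structure. What follows is a minimal sketch, not how the Eye Opener is actually built: the names `Position`, `Polarity`, and `missing_quadrants` are hypothetical, chosen only to show that each side carries its own upsides and downsides, and that an empty quadrant marks a likely blind spot.

```python
from dataclasses import dataclass, field


@dataclass
class Position:
    """One side of a polarity, with its own upsides and downsides (hypothetical)."""
    statement: str
    upsides: list[str] = field(default_factory=list)
    downsides: list[str] = field(default_factory=list)


@dataclass
class Polarity:
    """A thesis paired with its antithesis (hypothetical structure)."""
    thesis: Position
    antithesis: Position

    def missing_quadrants(self) -> list[str]:
        """Return the names of quadrants that nobody has filled in yet."""
        quadrants = {
            "thesis upsides": self.thesis.upsides,
            "thesis downsides": self.thesis.downsides,
            "antithesis upsides": self.antithesis.upsides,
            "antithesis downsides": self.antithesis.downsides,
        }
        return [name for name, items in quadrants.items() if not items]


# Someone who favors the thesis lists only its upsides
# and only the downsides of the opposite view.
pair = Polarity(
    thesis=Position("Move fast", upsides=["Early feedback"]),
    antithesis=Position("Move carefully", downsides=["Missed opportunities"]),
)
print(pair.missing_quadrants())
```

In this example the two empty quadrants are the downsides of the favored idea and the upsides of the rejected one, which are the "hidden bad" and "hidden good" from the list above.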

Customizable Eye Opener: ~50% Reduction

Note: The yellow “Customizable Eye Opener” is hypothetical. These estimates show what such a tool could achieve if developed.

The breakthrough comes when users can:

- correct AI’s polarities,

- rewrite upsides and downsides,

- add missing perspectives,

- and challenge the framework itself.

This shifts decision-making from “AI told me the answer” to “AI helps me see my own thinking more clearly.” The user becomes the owner of the frame, not the follower of it.

This reduces both human blind spots and AI’s structural biases. The result is a world that is roughly half as blind as ours.
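Since the customizable tool is hypothetical, any code for it is speculative. Still, the first three user actions listed above (correcting a polarity, rewriting upsides and downsides, adding a perspective) can be sketched as edits to an AI-proposed frame. The `Frame` class and its method names below are assumptions made for illustration. Challenging the framework itself is left out because it does not reduce to a simple field edit.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    """An AI-proposed frame that the user is free to edit (hypothetical)."""
    thesis: str
    antithesis: str
    upsides: dict[str, list[str]] = field(default_factory=dict)
    downsides: dict[str, list[str]] = field(default_factory=dict)
    perspectives: list[str] = field(default_factory=list)
    user_edits: list[str] = field(default_factory=list)  # log of what the user changed

    def correct_polarity(self, antithesis: str) -> None:
        """Replace the AI's proposed opposite with the user's own."""
        self.antithesis = antithesis
        self.user_edits.append("polarity")

    def rewrite(self, kind: str, side: str, items: list[str]) -> None:
        """Overwrite the upsides or downsides recorded for one side."""
        if kind not in ("upsides", "downsides"):
            raise ValueError("kind must be 'upsides' or 'downsides'")
        getattr(self, kind)[side] = list(items)
        self.user_edits.append(f"{kind}:{side}")

    def add_perspective(self, perspective: str) -> None:
        """Add a viewpoint the AI's frame left out."""
        self.perspectives.append(perspective)
        self.user_edits.append("perspective")


# The AI proposes a strawman opposite; the user corrects it and fills in the rest.
frame = Frame(thesis="Work remotely", antithesis="Never work remotely")
frame.correct_polarity("Work on site")
frame.rewrite("downsides", "thesis", ["Weaker informal ties"])
frame.add_perspective("New hires")
print(frame.user_edits)
```

Keeping a log of user edits is one way to make ownership of the frame visible: the more entries it holds, the less the final frame is simply what the AI proposed.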

What Would a Half-Blind World Look Like?

Better personal decisions

Fewer preventable breakups, financial disasters, and self-sabotaging loops.

Better governance and regulation

More “both-and,” less absolutism (see Rethinking Regulation). More complementarity, fewer ideological battles.

Better justice

Less tunnel vision. More hypothesis-testing, fewer false convictions.

Better science and medicine

Fewer “of course it’s X” mistakes. Better interpretation of evidence. More humility, less hype.

Better media

More curiosity, less polarization. More nuance, fewer enemies.

Fewer wars

This is not the end of conflict or complexity, but it’s the end of avoidable blindness.

And that alone would be revolutionary.

A Different Kind of Intelligence

Structured Dialectics doesn’t eliminate conviction or disagreement. It cultivates impeccability — the habit of checking your own vision before acting.

“The first step to wisdom is noticing how much of reality your certainty has edited out.”